With Paul Reitter and Paul North’s new translation of Capital, Volume 1, socialists across the country are finding an excuse to dive into Marx’s monumental work. DSA is hosting a national reading group (translation agnostic!), and your chapter may be organizing sessions to follow along in more depth and build local connections. The best time for a socialist to start reading Capital was yesterday, but the next best is today!

When you buy your coffee in the grocery aisle, you buy nothing more than a simple commodity, exchanging your money for a product whose price has appeared as if from thin air. Playing the role of a consumer, you don’t tend to care about the history behind the coffee: who grew and picked it, who roasted it, who stocked it on the shelves. In most cases, the commodity you purchase and consume has already gone through a series of purchases and consumptions in the course of its production: the water, seeds, land, and labor needed to grow and pick the beans; fuel, equipment, and yet more land and labor to run the roastery; and finally the building, shelving, and, once more, land and labor to stock the product next to the tea, where you select it and pay as the end consumer. Perhaps your political commitments help you resist the erasure of these intermediate processes, and you choose based on helpful notes on the coffee packaging, but, for the most part, the nature of the commodity is to collapse these interactions into one simple indicator: the price you pay in the market. Inside the price is a history of all these purchases and transformations.

I was not yet a Marxist the first time I deeply considered how our contemporary society hides these relationships. I was taking a college class on computer systems, which outlined the basic principles of computation. The class explored how logic circuits are built to support machine code, how programming languages provide interfaces to that machine code, and how these layers in turn support network protocols and, eventually, industrial-scale software engineering. I distinctly remember realizing in that class that computers are, at their core, built on a principle of abstraction that hides the social character of the labor processes needed to create them. By design, using a computer requires little to no knowledge of its history: the labor that went into its physical and virtual construction, from mining and extracting the minerals needed to build the physical devices, to designing the various components that make up computing equipment, to programming the software that runs applications.

However, reading Karl Marx’s Capital finally gave me language for these histories of labor embedded in modern computation, and a larger framework to understand them. What I first observed in that college class was an instance of what Marx describes as the “fetish character of commodities.” “Fetish” here refers to objects attaining mystical or religious powers (and has nothing to do with paraphilias). In Marx’s explanation, commodities become “fetishized” because price is assumed to be an intrinsic characteristic of an object rather than a signal that collapses an accumulation of social relationships into a single number. The price, already an abstraction, takes on a fetish character when it is presented as a fundamental property of the object, appearing mystically from a nebulous somewhere (such as the imagined, ideal market) instead of reflecting all the real, messy, and complicated processes behind the object.

Through Marx, I saw how the transition from workshop production to heavy industry mirrored the governing logic of modern computation, which coordinates a dizzying scale of human collaboration through spontaneous interactions in the market while keeping working-class exploitation hidden in the background throughout the entire process. Rather than breaking from earlier systems of production, computational logic has been able to dominate 21st-century production and distribution precisely because its principles of abstraction mirror the fetish character of commodities under capitalism. Technologies like artificial intelligence, which could greatly augment the human capacity to produce useful objects and solve real problems, are instead being used in a mad dash to lay off workers and replace them with cloud computing technology that threatens to erase humanity’s meager progress toward mitigating climate change.

Early attempts within the software community to overcome this alienation also mirror early utopian socialist attempts to reason away the contradictions of capitalism. And, like utopian socialism, these software projects are doomed to be subsumed and destroyed by capitalism. Instead, we must apply the critique of capital’s inner logic that Marx develops in Capital to modern computation. Doing so reveals that the alienation of software and the alienation of capitalism are the same, and understanding both should begin with understanding Marx’s Capital.

Early computing and workshop production

Just like early workshop production of other commodities under capitalism, early computing projects were localized in single shops run by a few collaborating engineers, whether in industrial, military, or university settings. Though computers were not yet produced as consumer-facing commodities at the time, early engineers already used programming techniques that relied on abstraction to make production more efficient.

In Capital, Marx compares early handicraft production with Defoe’s Robinson Crusoe and the eponymous character’s personal recordkeeping. Because Robinson must industriously produce food, shelter, and other necessities with only the hours available to him in a day, he sets out to use his labor time as frugally as possible to produce enough to survive. His relations are not social in nature, but he is still driven to economize by the natural limits of the 24-hour day, finding repeatable processes that let him complete his labor within the time he has.

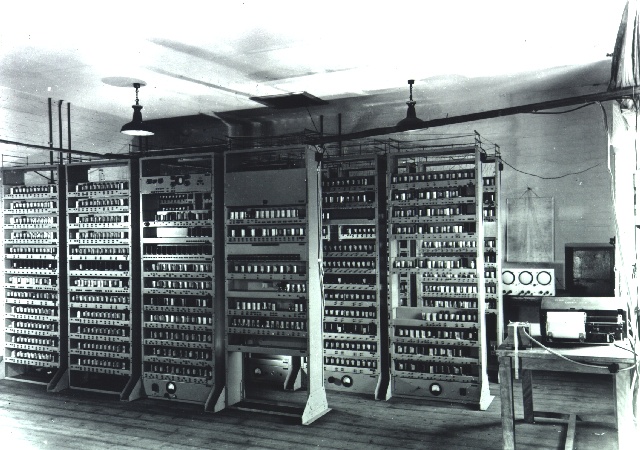

Cambridge University’s Maurice Wilkes, David Wheeler, and Stanley Gill faced a similar problem while writing software for an early computer, the Electronic Delay Storage Automatic Calculator (EDSAC). In their programming work, they found many cases where they were writing code that performed the same function in many different contexts. Writing this repeated code was wasted work, and it also made errors more difficult to find and fix.

This led Wilkes to conceive of the subroutine library, and he handed the project off to his PhD student, Wheeler, to develop. A subroutine library is a collection of reusable code snippets, each performing one function that can be called from multiple places across a program. Libraries allow even a single programmer to abstract part of their work away, codifying it in a repeatable process with code that can be reused at will. Like Robinson and his ledger, subroutine libraries allowed EDSAC’s programmers to use their limited labor time more efficiently, freeing it for other, more important tasks.

Subroutines also created a mechanism for more efficient collaboration, in which the programming process could be broken down into specific tasks and delegated to individuals. To make this possible, subroutine library authors needed to define and document their code well enough that others could use it, but not so thoroughly that the documentation repeated all of the internal complexity, which would make the library pointless. As Wheeler wrote in his 1952 paper “The Use of Sub-routines in Programmes,” fulfilling this requirement involved the “considerable task of writing a description so that people not acquainted with the interior coding can nevertheless use it easily.”

Even within the small scale of the university research laboratory, subroutine libraries abstracted labor away. If a subroutine library is documented thoroughly enough, a programmer doesn’t need to care about the circumstances of the labor process involved: whether Professor Wilkes or his PhD student, Wheeler, wrote it, how late its author stayed up working on it to meet thesis deadlines, or whether its author collaborated with another PhD student who dropped out of the program due to stress. What matters to the programmer who uses it is simply what the subroutine does: whether the documentation clearly communicates what it does, and whether the subroutine does it effectively.

As Wheeler put it in 1952, “the prime objectives to be borne in mind when designing sub-routine libraries are simplicity of use, correctness of codes, and accuracy of description. All complexities should—if possible—be buried out of sight.”
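As a loose modern illustration of Wheeler’s principle, here is a minimal Python sketch (EDSAC was programmed in its own order code, not Python, and the routine and data below are hypothetical). The docstring plays the role of the library description: it tells callers what the routine does without requiring them to read the loop inside, and the same routine is reused rather than rewritten in each place it is needed.

```python
def mean(values: list[float]) -> float:
    """Return the arithmetic mean of a non-empty list of numbers.

    Raises ValueError if the list is empty. A caller needs only this
    description, not the details of the loop below.
    """
    if not values:
        raise ValueError("mean() requires at least one value")
    total = 0.0
    for value in values:
        total += value
    return total / len(values)


# The same routine is reused wherever it is needed, instead of being
# rewritten (and re-debugged) in each place.
daily_temps = [12.0, 15.5, 14.0]
exam_scores = [71.0, 88.0, 93.0, 64.0]
print(mean(daily_temps))
print(mean(exam_scores))  # 79.0
```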

APIs and the “black box” of computation

Over the following decades, new developments in computing amplified this concept at a far greater scale, extending it across different, sometimes distant, hardware and software systems built on top of existing programs. This practice gave rise to the Application Programming Interface (API), a technology of abstraction that has become a core principle of modern computing.

In his 2018 talk “A Brief, Opinionated History of the API,” Joshua Bloch states that an API is supposed “to define a set of functionalities independent of their implementation, allowing the implementation to vary without compromising the users of the component.” The API has become the architecture through which modern computers moved from handicraft products to the accumulated work of many layers of disparate market actors building in collaboration with one another.

The core concept of an API relies on inputs (the type of data that a software or hardware component expects to receive) and outputs (the agreed-upon way the component will transform those inputs into something new). This input-output contract could be simple: give me two numbers, and I will return their sum. Or it could be complex: give me a photograph, and I will tell you whether it contains a bird. At each layer of abstraction, the ideal input-output agreement is a “black box,” measured only by its ability to produce correct results with the proper characteristics (Is it fast enough? Does it use too much battery life?) and with minimal “side effects” on other parts of the system.
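Bloch’s definition can be made concrete with a minimal Python sketch, assuming a hypothetical “adder” contract that matches the two-numbers example above. Two implementations with very different internals satisfy the same input-output agreement, so code written against the contract works with either one.

```python
from typing import Protocol


class Adder(Protocol):
    """The contract: given two integers, return their sum."""

    def add(self, a: int, b: int) -> int: ...


class BuiltinAdder:
    """One implementation: delegates to Python's + operator."""

    def add(self, a: int, b: int) -> int:
        return a + b


class CountingAdder:
    """Another implementation: moves one step at a time from a toward a + b.

    Much slower for large inputs, but it honors the same contract.
    """

    def add(self, a: int, b: int) -> int:
        result = a
        step = 1 if b >= 0 else -1
        for _ in range(abs(b)):
            result += step
        return result


def total_cost(adder: Adder, price: int, shipping: int) -> int:
    # The caller relies only on the contract, never on how add() works.
    return adder.add(price, shipping)


print(total_cost(BuiltinAdder(), 40, 5))   # 45
print(total_cost(CountingAdder(), 40, 5))  # 45
```

The second implementation is far slower for large inputs, which is exactly the kind of characteristic (is it fast enough?) by which a black box gets judged, even though its outputs are identical.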

A computer is based on dozens of layers of these black boxes and input-output agreements: a physical memory system commits to accurately represent a computer’s state, machine code commits to accurately transform the state of that memory, a programming language commits to compile from a more readable syntax into accurate machine code, a code library commits to perform various functions when that code is called, and an operating system commits to allowing various applications to run smoothly on it. Though one person may in some cases understand all of these layers, modern computing projects require many people working in tandem to establish each layer, and efficient hardware and software production requires specialization to work at scale. As a result, the specificities of each layer are abstracted, and, as with subroutines, most programmers only need to consider what each layer does, how well it’s documented, and whether it accomplishes its tasks well.

For example, suppose Company A, which employs three software engineers, licenses a piece of software or hardware produced by Company B’s team of five engineers, intending to extend and use it for Company A’s own purposes. The owner of Company A doesn’t care about the process of production over at Company B. They don’t care whether the five Company B engineers used subroutines to save coding time or how many family birthdays those engineers had to miss to finish the program. What they care about are the program’s inputs and outputs: whether it accurately follows instructions, whether it executes efficiently, and whether it costs an acceptable amount to purchase.

Though an end user may buy the single commodity they call “a computer,” what they are buying is the result of this process happening over and over to create a huge number of interlocking hardware and software components, the product of many commodity inputs into the production process, all coordinated by the logic of the API. Even a company that prioritizes vertical integration, like Apple, relies on a complex web of suppliers for its hardware and builds its operating systems as extensions of existing software: macOS is built on Darwin, an open-source core that incorporates code from FreeBSD, and it ships other open-source software like OpenSSH.

Just as with any commodity, when you interact with software, you interact with the thousands of people involved in a complex social production process with a long history. But the developer does not engage with that social process, only with the mystified form the program takes on. The fetish character of software as a commodity hides the social nature of this production process. Instead of collaborating with the five engineers of Company B, the three engineers at Company A appear to be interacting with a product they licensed and extended. Like any fetishized commodity, the API appears mystically imbued with the capacity to convert inputs into outputs, with little indication of the labor needed to create and maintain the product.

Web services and artificial intelligence

Web services and artificial intelligence have expanded these abstractions further still. Within these sectors, software APIs now act as gateways to production processes of massive scale, and labor exploitation and environmental destruction are obscured behind layers of doors all marked “no admittance except on business.”

As industrial software development grew in scale, a contradiction arose between, on the one hand, software’s status as a product of labor and therefore bearer of value and, on the other, its endless reproducibility. How can software be sold as a commodity if one can pay for it once and use it as many times as one wants? An initial attempt to resolve this contradiction involved expanding copyright and aggressively enforcing it whenever violations were detected. But as the internet, rather than disks or other physical storage media, became a more viable channel for distributing software, a new business model emerged.

Under what Robert W. Gehl calls the “perpetual beta,” software updates could be pushed in small increments over the course of a single day (think Google Docs) rather than in yearly releases (think Microsoft Word 98). This led to the rise of software as a service (SaaS) on the web, where end users or software firms pay for particular functionality based on their usage.

What companies pay for with SaaS is not just software, but a broader set of commodity inputs that make it work: the physical infrastructure of server farms, wages for customer support operators, and access to ongoing software updates produced by a team of engineers. The price then represents not just the software, which is hard to squish into the commodity form, but a broader social labor process.

As a consequence, web APIs became more important because they allowed developers to access SaaS programs with just a few inputs (the URL and any information the service needs) and outputs (the actions the service will perform). For example, instead of paying to license software, Company A can now use Twilio’s SMS API to request that Twilio send a text message to a user and inform Company A’s application of the user’s response, in exchange for a per-use fee accumulated over the course of a billing cycle.
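To illustrate the pay-per-use relationship described above, here is a minimal Python sketch. The gateway class, its method names, and the per-message price are hypothetical stand-ins, not Twilio’s actual API: the point is only that Company A’s code sees inputs (a phone number and a message) and outputs (a delivery ID), while each call quietly adds to a bill accumulated over the billing cycle.

```python
from dataclasses import dataclass, field


@dataclass
class FakeSmsGateway:
    """Hypothetical stand-in for a metered SMS web API (not Twilio's API).

    To the caller it is a black box: a phone number and message go in,
    a delivery ID comes out, and every call adds a fee to the bill.
    """

    price_per_message_cents: int  # hypothetical per-use price
    messages_sent: int = 0
    sent_log: list[tuple[str, str]] = field(default_factory=list)

    def send_sms(self, to: str, body: str) -> str:
        """Record the message and return a made-up delivery ID."""
        self.messages_sent += 1
        self.sent_log.append((to, body))
        return f"msg-{self.messages_sent}"

    def invoice_cents(self) -> int:
        """Total fees accumulated over the current billing cycle."""
        return self.messages_sent * self.price_per_message_cents


def send_verification_code(gateway: FakeSmsGateway, phone: str, code: str) -> str:
    # Company A's side of the exchange: inputs go in, an ID comes out.
    return gateway.send_sms(phone, f"Your verification code is {code}")


gateway = FakeSmsGateway(price_per_message_cents=2)
for phone in ["+15550001", "+15550002", "+15550003"]:
    send_verification_code(gateway, phone, "123456")
print(gateway.invoice_cents())  # 6
```

Nothing in Company A’s function reveals who runs the servers, writes the carrier integrations, or answers support tickets; the only trace of that labor is the invoice.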

The API lets Company A incorporate a function to verify a user’s identity via SMS without building this functionality from scratch. Instead of hiring its own workers and directing its own projects, the company merely needs to pay a price.

With the proliferation of web APIs, a single application can access a dizzying amount of functionality, an endless supply of social labor, with one single commodity: money. In most cases, a startup that can achieve impressive results with “only 4-5 employees” does so by accessing this network of interlocking services, transforming their inputs and outputs into a function that is actually the collaboration of untold thousands hidden behind the APIs.

Capitalism has used the rise of so-called “artificial intelligence” to further extend this proliferation of black boxes into new domains of social labor. Artificial intelligence is often just another way to obscure the presence of labor. When a customer visits an Amazon Go store, pulls a bottle of kombucha off the shelf, and leaves without visiting a cashier, they aren’t thinking about whether the task of identifying their purchase is performed by machine learning or by hundreds of low-wage workers in India watching CCTV feeds. (In reality it is usually a mix of both, with labor outputs eventually being used to improve the system’s automated functions.) And if Walmart were to license Amazon’s technology for its own use, it would not care how the black box works either, or what the working conditions are for manual operators, software engineers, or data center technicians. The only thing that matters to the purchaser is whether the service makes financial sense to implement, given the price and efficacy of the results Amazon is able to deliver.

Generative AI such as OpenAI’s GPT follows similar principles, with systems that can take arbitrary text inputs and provide responses that simulate human outputs. These systems rest on a large amount of human labor: the models are trained on an undisclosed body of text that likely includes copyrighted books, and refined through manual supervision by labelers who evaluate the quality of their outputs. This continues the exploitation of intellectual labor involved in Google’s project to index the web, a web populated by what Evgeny Morozov calls “the usual suspects: bots, hobbyists, academics, teenagers. But, also, plenty of precarious media professionals who are building their online reputations, hoping to produce ‘viral’ content.”

Though OpenAI offers a consumer interface to its technology called ChatGPT, which is estimated to make up 85% of its revenue, it also makes hundreds of millions of dollars from its API product, allowing companies to access its input-output system remotely and extend it for their own purposes. In addition to their hidden labor exploitation, generative AI systems also represent a major increase in the energy required for cloud computation, threatening to wipe out even the meager progress made around the world towards combating climate change.

Free software and utopian socialism

Under capitalism, production is unavoidably social in nature, with collective work being performed at a massive scale to transform nature’s gifts into useful objects to satisfy human desires. At the same time, the ownership of this production is private in nature. Capitalists organize and control this collective work and hoard the profits even as they become increasingly removed from the actual process of production. Like the fits and starts of early socialism through utopian commune projects, early attempts to wrestle with these contradictions in the software world have involved creating small shelters from the broader competitive environment, shelters that are ultimately doomed to failure.

As capitalist development began to incorporate computing into the global process of production and circulation, early developers like Richard Stallman established the free software movement to preserve the collaborative spirit of early computing. (This account, of course, ignores the military history of computing, as well as the rampant culture of sexism and the connections to Jeffrey Epstein at MIT and other elite institutions.)

Free software does not mean “free” as in a lack of price, but in the sense of liberty; as Stallman says, “think free as in free speech, not free beer.” Users of free software have the freedom to modify and extend its source code and, crucially, the freedom to redistribute it.

But the freedom offered to the consumer of free software is, at the same time, a challenge to the freedom of the software’s producer, namely, the freedom to make as much money from their product as possible. Large publicly traded firms performing development at industrial scale simply can’t justify the opportunity cost of derivative works cutting into their bottom line. This has driven the industry’s eventual turn toward SaaS, whose pay-for-use model is an attempt to outcompete free software and paper over the contradictions involved in software as a commodity.

Like utopian socialist projects, free software advocates outlined a path of individual withdrawal from this exploitative system and the establishment of an alternative model that would win out by its logical clarity and humanistic principles. And, like utopian socialist projects, free software projects have been unable to change broader trends in production.

Large companies may prioritize open-source development largely as a mechanism to build positive developer relations and recruit new developers to join their workforce. Some firms also release a version of their software in a free and open-source form, using it as a loss leader to upsell developers to a more feature-rich version. For example, the software that powers the password manager Bitwarden is open source and available for any developer to extend, but because self-hosting a personal instance is often prohibitively expensive or complicated, the company offers a paid SaaS version for personal or enterprise use. Here, free software acts as a funnel, directing developers back into the pay-for-use model the broader industry has gravitated toward. Even these free software use cases are fragile: companies like Google that champion open-source software when market conditions are favorable will abandon those projects when it’s time to tighten the belt.

As long as capitalism organizes production on a global scale, utopian projects for software development will remain ephemeral. The commodity form and its associated concepts of abstraction in computation are a powerful scaling technology that has demonstrated the ability to outcompete these isolated projects. But at the same time, capitalism will continue to recreate a working class whose interests are at odds with those of the capitalist class. Marx’s Capital provides us with the tools to identify and better understand these dynamics.

Escaping this system, however, requires us to resolve the contradictions we identify. Truly free software requires that producers be able to associate freely, released from the fetters of capitalist ownership and the value form imposed on production. The answer to this contradiction lies in a political solution: the working class must establish its hegemony over production so that the social relations of production are managed as social relations rather than as abstractions. This means we need to win working-class control at the level of society as a whole, not in the isolated workshop or open-floor-plan office, but through the widespread revolutionary activity of the entire class working in concert.

Image: The Electronic Delay Storage Automatic Calculator at the University of Cambridge Mathematical Laboratory in England, 1948. Photo copyright Computer Laboratory, University of Cambridge. Shared via the Creative Commons Attribution 2.0 Generic license.